Staying a Float

Problem Set-Up

I designed a take-home challenge for data engineering candidates and consistently encountered problems when it came to converting float values to int values.

The problem involved converting decimal dollar values to integer cents values.

Typically, candidates would approach this problem as follows:

dollars = 0.29

cents = int(dollars * 100)

If we didn’t know any better, we might naively assume that cents == 29. After all, this is arithmetically correct. Many people are surprised to discover that, as written above, cents == 28. The reason lies in the way the float type is encoded in binary. This post will dive into this concept a bit further and present two easy workarounds.

A Word About Floats

Let’s again take a look at our example from above.

>>> dollars = 0.29

>>> as_cents = lambda e: int(e * 100)

If we call our as_cents function on dollars, we get the following result:

>>> as_cents(dollars)

28

The float representation of dollars * 100 makes it a bit clearer why we do not arrive at our expected result.

>>> dollars * 100

28.999999999999996

The subtlety here is that a float is a binary approximation of a decimal number. Python's float is actually a 64-bit double, but the idea is easiest to see at the single-precision level, where 32 bits of data encode three individual components that, put together, give the closest representable approximation of the target number.
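To see the double-precision version of the example above for yourself, here is a short sketch using only the standard library: sys.float_info reports how many significant bits Python's float carries, and decimal.Decimal, when given a float, prints the exact binary value that is actually stored for 0.29.

```python
import sys
from decimal import Decimal

# Python's built-in float is an IEEE 754 double: 53 significant bits
# (52 stored plus 1 implicit), versus 24 for a single-precision float.
print(sys.float_info.mant_dig)  # 53

# Decimal(float) converts the stored binary value exactly, exposing the
# approximation hidden behind the short repr "0.29".
stored = Decimal(0.29)
print(stored)
print(stored < Decimal("0.29"))  # True: the stored value is slightly low

# Multiplying by 100 carries the error forward, and int() truncates it.
print(0.29 * 100)       # 28.999999999999996
print(int(0.29 * 100))  # 28
```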

Casting Numbers as Floats

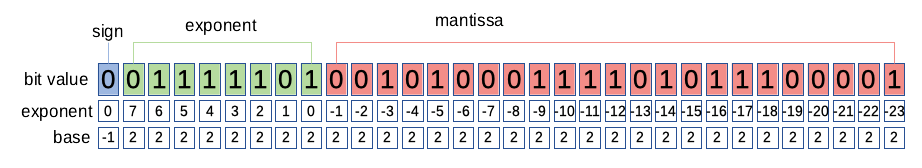

The 32 bits used to represent a float are segmented into three groups:

- Sign: 1 bit

- Exponent: 8 bits

- Mantissa: 23 bits

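They are combined in the following formula to produce a single float value:

$$x = (-1)^{\text{sign}} \times 2^{\text{exponent} - 127} \times (1 + \text{mantissa})$$

Here the exponent is stored with a bias of 127, and the mantissa is read as a binary fraction that follows an implicit leading 1.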
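One way to check the formula against Python's own single-precision encoding is a small sketch built on the standard struct module: pack a number as a 32-bit float, read the same bytes back as an unsigned integer, and split out the three fields. The helper names float32_fields and decode_float32 are hypothetical, and the decoder only handles normal numbers (not zero, subnormals, infinities, or NaN).

```python
import struct


def float32_fields(x: float) -> tuple[int, int, int]:
    """Hypothetical helper: round x to single precision and split its 32 bits."""
    # ">f" packs x as a big-endian 32-bit float; ">I" reads the same four
    # bytes back as an unsigned 32-bit integer.
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31                 # 1 bit
    exponent = (bits >> 23) & 0xFF    # 8 bits, stored with a bias of 127
    mantissa = bits & 0x7FFFFF        # 23 bits of fraction
    return sign, exponent, mantissa


def decode_float32(sign: int, exponent: int, mantissa: int) -> float:
    """Apply (-1)**sign * 2**(exponent - 127) * (1 + fraction); normal numbers only."""
    fraction = mantissa / 2**23       # the 23 mantissa bits read as a value in [0, 1)
    return (-1) ** sign * 2.0 ** (exponent - 127) * (1 + fraction)


fields = float32_fields(0.29)
print(fields)                   # (0, 125, 1342177)
print(decode_float32(*fields))  # slightly less than 0.29
```

Every step in decode_float32 is exact in double precision, so its result matches the single-precision value that struct produced bit for bit.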

Let’s use the formula to figure out how to encode our starting value of 0.29 as a 32-bit float!

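We can solve for each value in three simple steps:

- Sign: 0.29 is positive, so the sign bit is 0.

- Exponent: the largest power of two that does not exceed 0.29 is 2^-2 = 0.25, so the unbiased exponent is -2. The exponent is stored with a bias of 127, giving -2 + 127 = 125.

- Mantissa: 0.29 / 0.25 = 1.16. The leading 1 is implicit, so the 23 mantissa bits must encode the remaining fraction, 0.16.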
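The three steps can be sketched in Python as follows. The function name encode_float32_fields is hypothetical, and the sketch makes simplifying assumptions: it handles only normal, non-zero inputs, ignores the rare case where the mantissa rounds up to 2**23, and starts from Python's 64-bit value of x, so it rounds twice and could differ from a direct conversion in rare tie cases.

```python
import math


def encode_float32_fields(x: float) -> tuple[int, int, int]:
    """Hypothetical helper: hand-encode x as single-precision (sign, exponent, mantissa).

    Sketch for normal, non-zero numbers only; no handling of zero, subnormals,
    infinities, NaN, or a mantissa that rounds up to 2**23.
    """
    # Step 1: the sign bit is 1 for negative numbers, 0 otherwise.
    sign = 1 if x < 0 else 0
    x = abs(x)

    # Step 2: math.frexp gives x = f * 2**k with 0.5 <= f < 1, so in the
    # 1.xxx form the unbiased exponent is k - 1; the stored exponent adds 127.
    _, k = math.frexp(x)
    unbiased = k - 1
    exponent = unbiased + 127

    # Step 3: divide out the power of two, drop the implicit leading 1, and
    # scale the remaining fraction to 23 bits, rounding to the nearest integer.
    fraction = x / 2.0 ** unbiased - 1
    mantissa = round(fraction * 2**23)
    return sign, exponent, mantissa


sign, exponent, mantissa = encode_float32_fields(0.29)
print(sign, exponent, format(mantissa, "023b"))  # 0 125 00101000111101011100001
```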

Here is how each of these components is encoded in the 32-bit binary array (sign | exponent | mantissa):

0 | 01111101 | 00101000111101011100001

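To convert a component's bits back to a number, take the dot product of its bits with the matching powers of two; the three components are then combined with the formula. Let’s take a look at how this is done for the mantissa, where the i-th bit (counting from 1) is worth 2^-i: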

>>> bit_str = '00101000111101011100001'

>>> sum([2 ** (- (i + 1)) * int(b) for i, b in enumerate(bit_str)])

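0.15999996662139893

Aha! So we are unable to represent 0.16 exactly with the 23 bits we are given. The closest we can get is slightly less than our target of 0.16, so the stored version of 0.29 is slightly less than 0.29. Python's 64-bit float has 52 mantissa bits, but it runs into the same wall: its stored 0.29 is also a hair below 0.29, so dollars * 100 evaluates to 28.999999999999996, and int, which truncates toward zero, returns 28.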
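To get a feel for how often truncation bites, here is a quick sketch that checks every two-decimal amount from $0.00 to $9.99. The helper name naive_cents is hypothetical; it simply mirrors the as_cents lambda from earlier. Each amount is built as c / 100, which Python rounds to the nearest double, the same value the literal (for example 0.29) would produce.

```python
def naive_cents(dollars: float) -> int:
    """Hypothetical helper mirroring the naive approach: truncate dollars * 100."""
    return int(dollars * 100)


# Every two-decimal amount from 0.00 to 9.99, as the nearest double.
failures = [c for c in range(1000) if naive_cents(c / 100) != c]

print(len(failures), failures[:5])
print(29 in failures)  # True
```

Because int() truncates, a failing amount always comes out exactly one cent low: the product lands just below the intended whole number, never above it by enough to matter.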

Precise Manipulation of Decimal Numbers

Equipped with our new knowledge of floats, what is an aspiring Pythonista to do? There are two easy workarounds here:

Round before converting to int:

>>> dollars = 0.29

>>> as_cents = lambda e: int(round(e * 100))

>>> as_cents(dollars)

29

Cast as Decimal:

>>> from decimal import Decimal

>>> dollars = Decimal('0.29')

>>> as_cents = lambda e: int(e * 100)

>>> as_cents(dollars)

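29

Python has a whole decimal module just for dealing with situations where precision is key. Note that the Decimal is built from the string '0.29': Decimal(0.29) would copy the float's binary approximation and bring the original problem back.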
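The two workarounds can be compared side by side in a short sketch. The names float_to_cents and decimal_to_cents are hypothetical, and two assumptions are baked in: float inputs are meant to have at most two decimal places, and string inputs finer than a cent are rounded half-up (an assumed business rule, not something the decimal module imposes).

```python
from decimal import Decimal, ROUND_HALF_UP


def float_to_cents(dollars: float) -> int:
    """Hypothetical helper for workaround 1: round instead of truncating.

    Assumes the amount is meant to have at most two decimal places.
    """
    # dollars * 100 lands a tiny distance from the intended whole number of
    # cents (e.g. 28.999999999999996), so rounding to the nearest integer
    # recovers it where int() alone would truncate.
    return round(dollars * 100)  # one-argument round() already returns an int


def decimal_to_cents(amount: str) -> int:
    """Hypothetical helper for workaround 2: exact decimal arithmetic.

    Assumes the amount arrives as text such as "0.29"; anything finer than
    a cent is rounded half-up (an assumed rule).
    """
    cents = (Decimal(amount) * 100).quantize(Decimal("1"), rounding=ROUND_HALF_UP)
    return int(cents)


print(float_to_cents(0.29), decimal_to_cents("0.29"))    # 29 29
print(float_to_cents(-0.29), decimal_to_cents("-0.29"))  # -29 -29

# round() breaks exact ties toward the even integer: 0.125 is exactly
# 12.5 cents in binary, so it rounds to 12, while ROUND_HALF_UP gives 13.
print(float_to_cents(0.125), decimal_to_cents("0.125"))  # 12 13
```

The last line shows where the two approaches part ways: rounding a float is fine for amounts that are already whole cents, while Decimal lets you state the rounding rule explicitly when sub-cent values can appear.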

Wrap-Up

That’s it! I hope this post helped to illuminate some of the inner workings of the float type and how to handle these values when exact results matter, or when converting to other data types. Happy data engineering, folks!